Evaluate an agent

In this tutorial, we'll build a customer support bot that helps users navigate a digital music store. We'll create three types of evaluations:

- Final response: Evaluate the agent's final response.

- Single step: Evaluate any agent step in isolation (e.g., whether it selects the appropriate first tool for a given ).

- Trajectory: Evaluate whether the agent took the expected path (e.g., of tool calls) to arrive at the final answer.

We'll build our agent using LangGraph, but the techniques and LangSmith functionality shown here are framework-agnostic.

Setup

Configure the environment

Let's install the required dependencies:

pip install -U langgraph langchain langchain-community langchain-openai

and set up our environment variables for OpenAI and LangSmith:

import getpass

import os

def _set_env(var: str) -> None:

if not os.environ.get(var):

os.environ[var] = getpass.getpass(f"Set {var}: ")

os.environ["LANGCHAIN_TRACING_V2"] = "true"

_set_env("LANGCHAIN_API_KEY")

_set_env("OPENAI_API_KEY")

Download the database

We will create a SQLite database for this tutorial. SQLite is a lightweight database that is easy to set up and use.

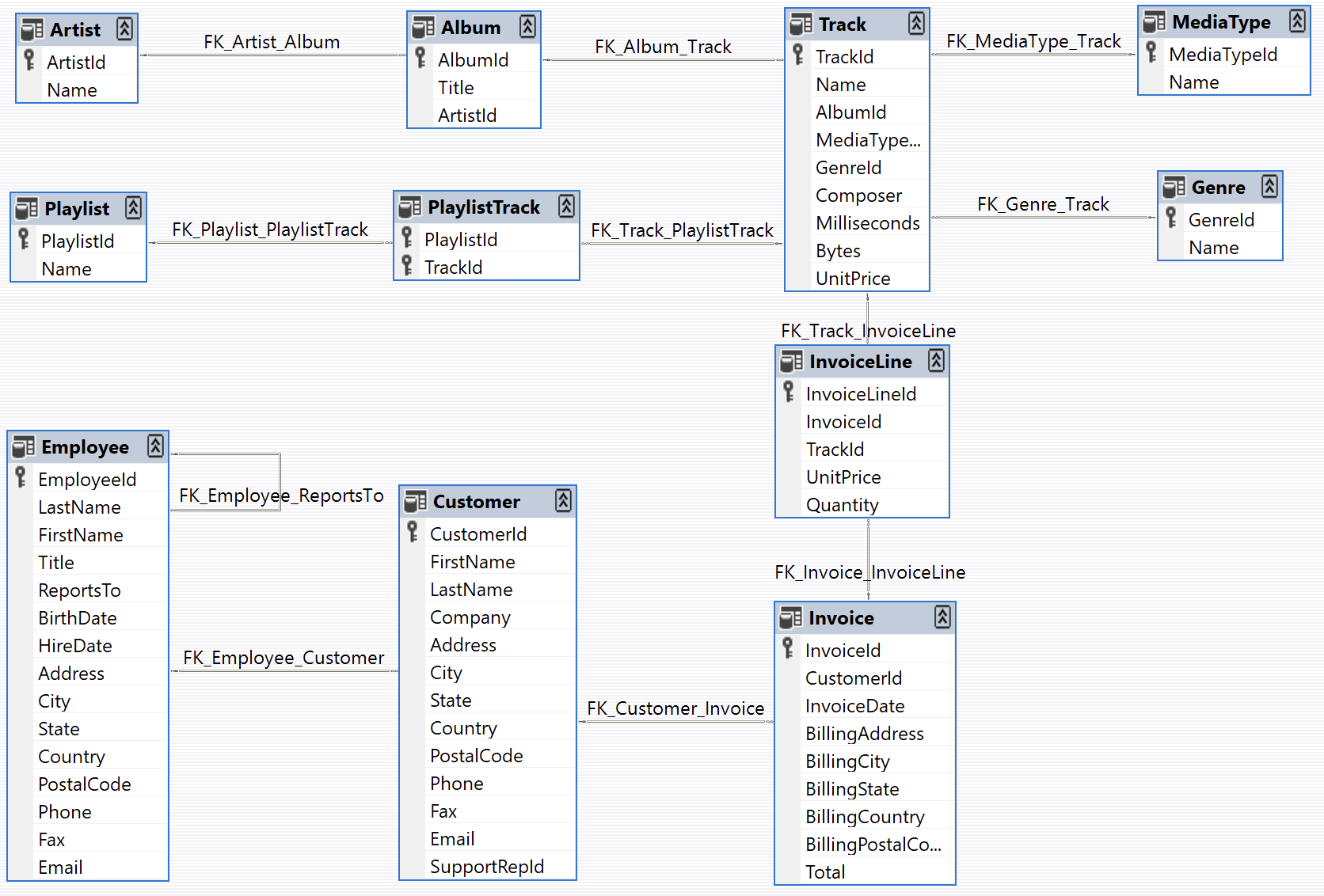

We will load the chinook database, which is a sample database that represents a digital media store.

Find more information about the database here.

For convenience, we have hosted the database in a public GCS bucket:

import requests

url = "https://storage.googleapis.com/benchmarks-artifacts/chinook/Chinook.db"

response = requests.get(url)

if response.status_code == 200:

# Open a local file in binary write mode

with open("chinook.db", "wb") as file:

# Write the content of the response (the file) to the local file

file.write(response.content)

print("File downloaded and saved as Chinook.db")

else:

print(f"Failed to download the file. Status code: {response.status_code}")

Here's a sample of the data in the db:

import sqlite3

conn = sqlite3.connect("chinook.db")

cursor = conn.cursor()

# Fetch all results

cursor.execute("SELECT * FROM Artist LIMIT 10;").fetchall()

[(1, 'AC/DC'), (2, 'Accept'), (3, 'Aerosmith'), (4, 'Alanis Morissette'), (5, 'Alice In Chains'), (6, 'Antônio Carlos Jobim'), (7, 'Apocalyptica'), (8, 'Audioslave'), (9, 'BackBeat'), (10, 'Billy Cobham')]

And here's the database schema (image from https://github.com/lerocha/chinook-database):

Define the customer support agent

We'll create a LangGraph agent with limited access to our database. For demo purposes, our agent will support two basic types of requets:

- Lookup: The customer can look up song titles based on other information like artist and album names.

- Refund: The customer can request a refund on their past purchases.

For the purpose of this demo, we'll model a "refund" by just deleting a row from our database. We won't worry about things like user auth for the sake of this demo. We'll implement both of these functionalities as subgraphs that a parent graph routes to.

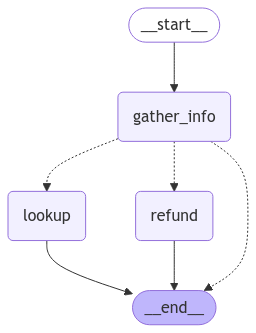

Refund agent

First we'll write some SQL helper functions:

import sqlite3

def _refund(invoice_id: int | None, invoice_line_ids: list[int] | None, mock: bool = False) -> float: ...

def _lookup( ...

And now we can define our agent

import json

from langchain.chat_models import init_chat_model

from langchain_core.runnables import RunnableConfig

from langgraph.graph import END, StateGraph

from langgraph.graph.message import AnyMessage, add_messages

from langgraph.types import Command, interrupt

from tabulate import tabulate

from typing_extensions import Annotated, TypedDict

class State(TypedDict):

"""Agent state."""

messages: Annotated[list[AnyMessage], add_messages]

followup: str | None

invoice_id: int | None

invoice_line_ids: list[int] | None

customer_first_name: str | None

customer_last_name: str | None

customer_phone: str | None

track_name: str | None

album_title: str | None

artist_name: str | None

purchase_date_iso_8601: str | None

gather_info_instructions = """You are managing an online music store that sells song tracks. \

Customers can buy multiple tracks at a time and these purchases are recorded in a database as \

an Invoice per purchase and an associated set of Invoice Lines for each purchased track.

Your task is to help customers who would like a refund for one or more of the tracks they've \

purchased. In order for you to be able refund them, the customer must specify the Invoice ID \

to get a refund on all the tracks they bought in a single transaction, or one or more Invoice \

Line IDs if they would like refunds on individual tracks.

Often a user will not know the specific Invoice ID(s) or Invoice Line ID(s) for which they \

would like a refund. In this case you can help them look up their invoices by asking them to \

specify:

- Required: Their first name, last name, and phone number.

- Optionally: The track name, artist name, album name, or purchase date.

If the customer has not specified the required information (either Invoice/Invoice Line IDs \

or first name, last name, phone) then please ask them to specify it."""

class PurchaseInformation(TypedDict):

"""All of the known information about the invoice / invoice lines the customer would like refunded. Do not make up values, leave fields as null if you don't know their value."""

invoice_id: int | None

invoice_line_ids: list[int] | None

customer_first_name: str | None

customer_last_name: str | None

customer_phone: str | None

track_name: str | None

album_title: str | None

artist_name: str | None

purchase_date_iso_8601: str | None

followup: Annotated[

str | None,

...,

"If the user hasn't enough identifying information, please tell them what the required information is and ask them to specify it.",

]

info_llm = init_chat_model("gpt-4o-mini").with_structured_output(

PurchaseInformation, method="json_schema", include_raw=True

)

async def gather_info(state: State) -> Command[Literal["lookup", "refund", END]]:

info = await info_llm.ainvoke(

[

{"role": "system", "content": gather_info_instructions},

*state["messages"],

]

)

parsed = info["parsed"]

if any(parsed[k] for k in ("invoice_id", "invoice_line_ids")):

goto = "refund"

elif all(

parsed[k]

for k in ("customer_first_name", "customer_last_name", "customer_phone")

):

goto = "lookup"

else:

goto = END

update = {"messages": [info["raw"]], **parsed}

return Command(update=update, goto=goto)

def refund(state: State, config: RunnableConfig):

# whether to mock the deletion. True if the configurable var 'env' is set to 'test'.

mock = config.get("configurable", {}).get("env", "prod") == "test"

refunded = _refund(

invoice_id=state["invoice_id"], invoice_line_ids=state["invoice_line_ids"], mock=mock

)

response = f"You have been refunded a total of: ${refunded:.2f}. Is there anything else I can help with?"

return {

"messages": [{"role": "assistant", "content": response}],

"followup": response,

}

def lookup(state: State) -> dict:

args = (

state[k]

for k in (

"customer_first_name",

"customer_last_name",

"customer_phone",

"track_name",

"album_title",

"artist_name",

"purchase_date_iso_8601",

)

)

results = _lookup(*args)

if not results:

response = "We did not find any purchases associated with the information you've provided. Are you sure you've entered all of your information correctly?"

followup = response

else:

response = f"Which of the following purchases would you like to be refunded for?\n\n```json{json.dumps(results, indent=2)}\n```"

followup = f"Which of the following purchases would you like to be refunded for?\n\n{tabulate(results, headers='keys')}"

return {

"messages": [{"role": "assistant", "content": response}],

"followup": followup,

"invoice_line_ids": [res["invoice_line_id"] for res in results],

}

graph_builder = StateGraph(State)

graph_builder.add_node(gather_info)

graph_builder.add_node(refund)

graph_builder.add_node(lookup)

graph_builder.set_entry_point("gather_info")

graph_builder.add_edge("lookup", END)

graph_builder.add_edge("refund", END)

refund_graph = graph_builder.compile()

# Assumes you're in an interactive Python environment

from IPython.display import Image, display

display(Image(refund_graph.get_graph(xray=True).draw_mermaid_png()))

Lookup agent

For the lookup (i.e. question-answering) agent, we'll use a simple ReACT architecture and give the agent tools for looking up track names, artist names, and album names based on the filter values of the other two. For example, you can look up albums by a particular artist, artists that released songs with a specific name, etc.

from langchain.embeddings import init_embeddings

from langchain_core.tools import tool

from langchain_core.vectorstores import InMemoryVectorStore

from langgraph.prebuilt import create_react_agent

def index_fields() -> tuple[InMemoryVectorStore, InMemoryVectorStore, InMemoryVectorStore]: ...

track_store, artist_store, album_store = index_fields()

@tool

def lookup_track( ...

@tool

def lookup_album( ...

@tool

def lookup_artist( ...

qa_llm = init_chat_model("claude-3-5-sonnet-latest")

qa_graph = create_react_agent(qa_llm, [lookup_track, lookup_artist, lookup_album])

display(Image(qa_graph.get_graph(xray=True).draw_mermaid_png()))

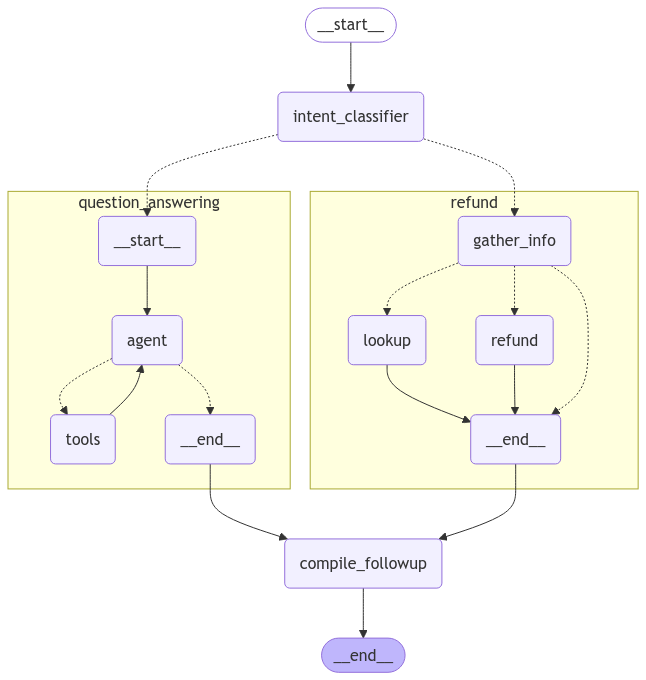

Parent agent

Now let's define a parent agent that combines our two task-specific agents. The only job of the parent agent is to route to one of the sub-agents by classifying the user's current intent.

class UserIntent(TypedDict):

"""The user's current intent in the conversation"""

intent: Literal["refund", "question_answering"]

router_llm = init_chat_model("gpt-4o-mini").with_structured_output(

UserIntent, method="json_schema", strict=True

)

route_instructions = """You are managing an online music store that sells song tracks. \

You can help customers in two types of ways: (1) answering general questions about \

tracks sold at your store, (2) helping them get a refund on a purhcase they made at your store.

Based on the following conversation, determine if the user is currently seeking general \

information about song tracks or if they are trying to refund a specific purchase.

Return 'refund' if they are trying to get a refund and 'question_answering' if they are \

asking a general music question. Do NOT return anything else. Do NOT try to respond to \

the user.

"""

async def intent_classifier(

state: State,

) -> Command[Literal["refund", "question_answering"]]:

response = router_llm.invoke(

[{"role": "system", "content": route_instructions}, *state["messages"]]

)

return Command(goto=response["intent"] + "_agent")

def compile_followup(state):

if not state.get("followup"):

return {"followup": state["messages"][-1].content}

return {}

graph_builder = StateGraph(State)

graph_builder.add_node(intent_classifier)

graph_builder.add_node("refund_agent", refund_graph)

graph_builder.add_node("question_answering_agent", qa_graph)

graph_builder.add_node(compile_followup)

graph_builder.set_entry_point("intent_classifier")

graph_builder.add_edge("refund", "compile_followup")

graph_builder.add_edge("question_answering", "compile_followup")

graph_builder.add_edge("compile_followup", END)

graph = graph_builder.compile()

We can visualize our compiled parent graph including all of its subgraphs:

display(Image(graph.get_graph().draw_mermaid_png()))

Try it out

state = await graph.ainvoke(

{"messages": [{"role": "user", "content": "what james brown songs do you have"}]}

)

print(state["followup"])

I found 20 James Brown songs in the database, all from the album "Sex Machine". Here they are: ...

state = await graph.ainvoke({"messages": [

{

"role": "user",

"content": "my name is Aaron Mitchell and my number is +1 (204) 452-6452. I bought some songs by Led Zeppelin that i'd like refunded",

}

]})

print(state["followup"])

Which of the following purchases would you like to be refunded for? ...

Evaluations

Agent evaluation can focus on at least 3 things:

- Final response: The inputs are a prompt and an optional list of tools. The output is the final agent response.

- Single step: As before, the inputs are a prompt and an optional list of tools. The output is the tool call.

- Trajectory: As before, the inputs are a prompt and an optional list of tools. The output is the list of tool calls

Create a dataset

First, create a dataset that evaluates end-to-end performance of the agent. We'll use this for final response and trajectory evaluation, so we'll add the relevant labels:

from langsmith import Client

client = Client()

# Create a dataset

examples = [

{

"question": "How many songs do you have by James Brown",

"response": "We have 20 songs by James Brown",

"trajectory": ["question_answering_agent", "lookup_tracks"]

},

{

"question": "My name is Aaron Mitchell and I'd like a refund.",

"response": "I need some more information to help you with the refund. Please specify your phone number, the invoice ID, or the line item IDs for the purchase you'd like refunded.",

"trajectory": ["refund_agent"],

},

{

"question": "My name is Aaron Mitchell and I'd like a refund on my Led Zeppelin purchases. My number is +1 (204) 452-6452",

"response": 'Which of the following purchases would you like to be refunded for?\n\n invoice_line_id track_name artist_name purchase_date quantity_purchased price_per_unit\n----------------- -------------------------------- ------------- ------------------- -------------------- ----------------\n 267 How Many More Times Led Zeppelin 2009-08-06 00:00:00 1 0.99\n 268 What Is And What Should Never Be Led Zeppelin 2009-08-06 00:00:00 1 0.99',

"trajectory": ["refund_agent", "lookup"],

},

{

"question": "Who recorded Wish You Were Here again? What other albums of there's do you have?",

"response": "Wish You Were Here is an album by Pink Floyd",

"trajectory": ["question_answering_agent", "lookup_album"],

},

{

"question": "I want a full refund for invoice 237",

"response": "You have been refunded $2.97.",

"trajectory": ["refund_agent", "refund"],

},

]

dataset_name = "Chinook Customer Service Bot: E2E"

if not client.has_dataset(dataset_name=dataset_name):

dataset = client.create_dataset(dataset_name=dataset_name)

client.create_examples(

inputs=[{k: v} for k, v in examples.items() if k in ("question",)],

outputs=[{k: v} for k, v in examples.items() if k in ("response", "trajectory")],

dataset_id=dataset.id

)

Final response and trajectory evaluators

We can evaluate how well an agent does overall on a task. This involves treating the agent as a black box and just evaluating whether it gets the job done or not.

We'll create a custom LLM-as-judge evaluator that uses another model to compare our agent's output to the dataset reference output, and judge if they're equivalent or not:

# Prompt

grader_instructions = """You are a teacher grading a quiz.

You will be given a QUESTION, the GROUND TRUTH (correct) RESPONSE, and the STUDENT RESPONSE.

Here is the grade criteria to follow:

(1) Grade the student responses based ONLY on their factual accuracy relative to the ground truth answer.

(2) Ensure that the student response does not contain any conflicting statements.

(3) It is OK if the student response contains more information than the ground truth response, as long as it is factually accurate relative to the ground truth response.

Correctness:

True means that the student's response meets all of the criteria.

False means that the student's response does not meet all of the criteria.

Explain your reasoning in a step-by-step manner to ensure your reasoning and conclusion are correct."""

# Output schema

class Grade(TypedDict):

"""Compare the expected and actual answers and grade the actual answer."""

reasoning: Annotated[str, ..., "Explain your reasoning for whether the actual response is correct or not."]

is_correct: Annotated[bool, ..., "True if the student response is mostly or exactly correct, otherwise False."]

# LLM with structured output

grader_llm = init_chat_model("gpt-4o-mini", temperature=0).with_structured_output(Grade, method="json_schema", strict=True)

# Evaluator

async def final_answer_correct(inputs: dict, outputs: dict, reference_outputs: dict) -> bool:

"""Evaluate if the final response is equivalent to reference response."""

user = f"""QUESTION: {inputs['question']}

GROUND TRUTH RESPONSE: {reference_outputs['response']}

STUDENT RESPONSE: {outputs['response']}"""

grade = await grader_llm.ainvoke([{"role": "system", "content": grader_instructions}, {"role": "user", "content": user}])

return grade["is_correct"]

The more complex your agent, the more possible steps it could fail at. In such cases, it can be values to come up with non-binary evaluations that give your agent partial credit for taking some correct steps even if it doesn't get to the correct final answer. Trajectory evaluations make it easy to do this — we can compare the actual sequence of steps the agent took to the desired sequence of steps, and score it based on the number of correct steps it took. In this case, we've defined our end-to-end dataset to include an ordered subsequence of steps we expect our agent to have taken. Let's write an evaluator that checks the actual agent trajectory to see if the desired subsequence occurred:

def trajectory_subsequence(outputs: dict, reference_outputs: dict) -> bool:

"""Check if the actual trajectory contains the desired subsequence."""

if len(reference_outputs['trajectory']) > len(outputs['trajectory']):

return False

i = j = 0

while i < len(reference_outputs['trajectory']) and j < len(outputs['trajectory']):

if reference_outputs['trajectory'][i] == outputs['trajectory'][j]:

i += 1

j += 1

return i == len(reference_outputs['trajectory'])

Now we can run our evaluation. Our evaluators assume that our target function returns a 'response' and 'trajectory' key, so lets define a function that does so

async def run_graph(inputs: dict) -> dict:

"""Run graph and track the trajectory it takes along with the final response."""

trajectory = []

final_response = None

async for namespace, chunk in graph.astream({"messages": [

{

"role": "user",

"content": inputs['question'],

}

]}, subgraphs=True, stream_mode="debug"):

if chunk['type'] == 'task':

trajectory.append(chunk['payload']['name'])

if chunk['payload']['name'] == 'tools' and chunk['type'] == 'task':

for tc in chunk['payload']['input']['messages'][-1].tool_calls:

trajectory.append(tc['name'])

elif chunk['type'] == "task_result" and "followup" in [res[0] for res in chunk['payload']['result']]:

final_response = next(res[1] for res in chunk['payload']['result'] if res[0] == "followup")

else:

continue

return {"trajectory": trajectory, "response": final_response}

experiment_prefix = "sql-agent-gpt4o"

metadata = {"version": "Chinook, gpt-4o base-case-agent"}

experiment_results = await client.aevaluate(

run_graph,

data=dataset_name,

evaluators=[final_answer_correct, trajectory_subsequence],

experiment_prefix=experiment_prefix,

num_repetitions=1,

metadata=metadata,

max_concurrency=4,

)

experiment_results.to_pandas()

Single step evaluators

While end-to-end tests give you the most signal about your agents performance, for the sake of debugging and iterating on your agent it can be helpful to pinpoint specific steps that are difficult and evaluate them directly.

A crucial part of our agent is that it routes the user's intention correctly into either the "refund" path or the "question answering" path. Let's create a dataset and run some evaluations to really stress test this one component.

examples = [

{"messages": [{"role": "user", "content": "i bought some tracks recently and i dont like them"}], "route": "refund_graph"},

{"messages": [{"role": "user", "content": "I was thinking of purchasing some Rolling Stones tunes, any recommendations?"}], "route": "question_answering_graph"},

{"messages": [{"role": "user", "content": "i want a refund on purchase 237"}, {"role": "assistant", "content": "I've refunded you a total of $1.98. How else can I help you today?"}, {"role": "user", "content": "did prince release any albums in 2000?"}], "route": "question_answering_graph"},

{"messages": [{"role": "user", "content": "i purchased a cover of Yesterday recently but can't remember who it was by, which versions of it do you have?"}], "route": "question_answering_graph"},

]

dataset_name = "Chinook Customer Service Bot: Intent Classifier"

if not client.has_dataset(dataset_name=dataset_name):

def correct(outputs: dict, reference_outputs: dict) -> dict:

"""Check if the agent chose the correct route."""

assert outputs.goto == reference_outputs["route"]

experiment_results = await client.aevalaute(

graph.nodes['intent_classifier'],

data=dataset_name,

evaluators=[correct],

experiment_prefix=experiment_prefix,

metadata=metadata

max_concurrency=4,

)

Reference code

Click to see a consolidated code snippet

foo